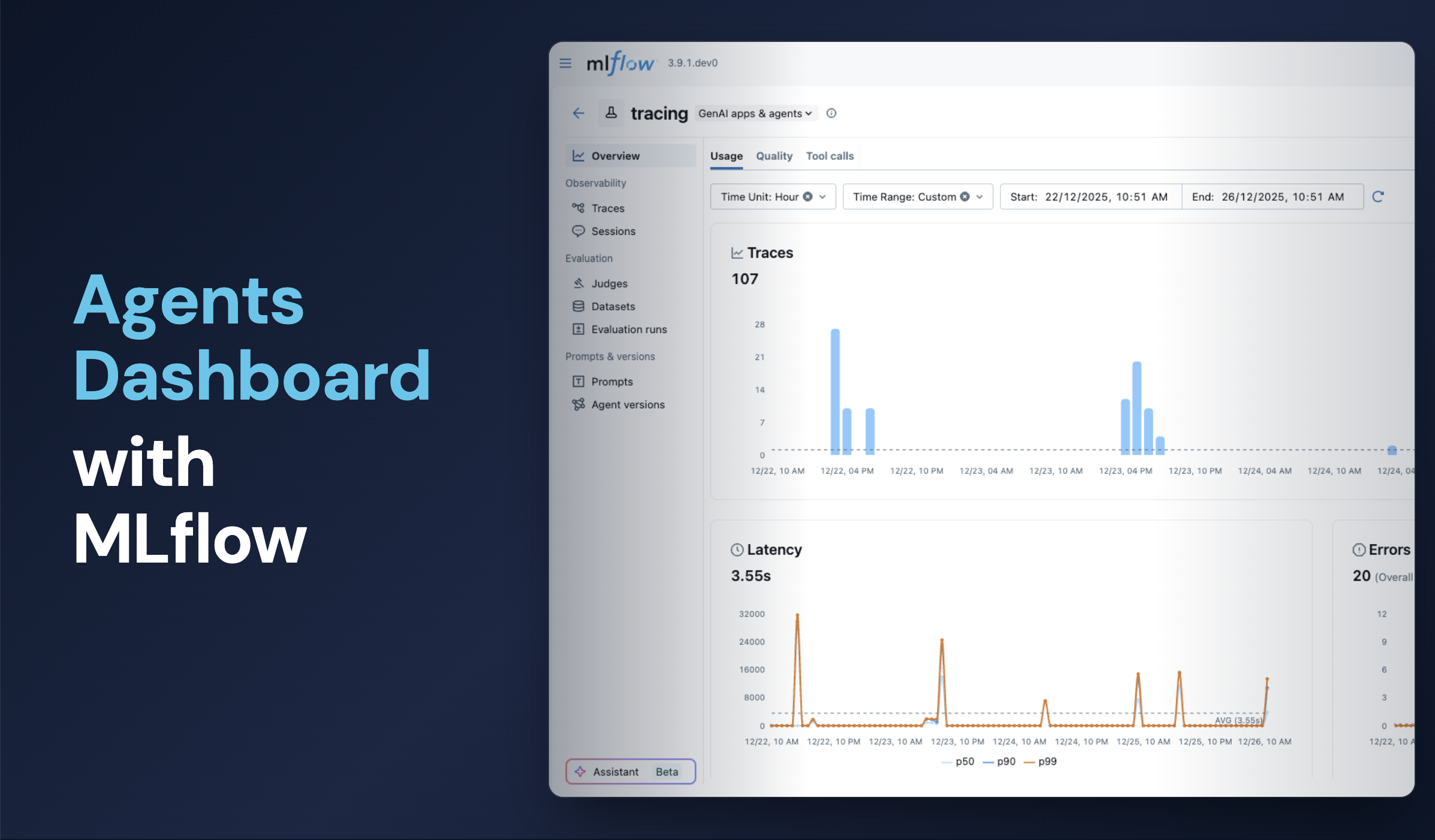

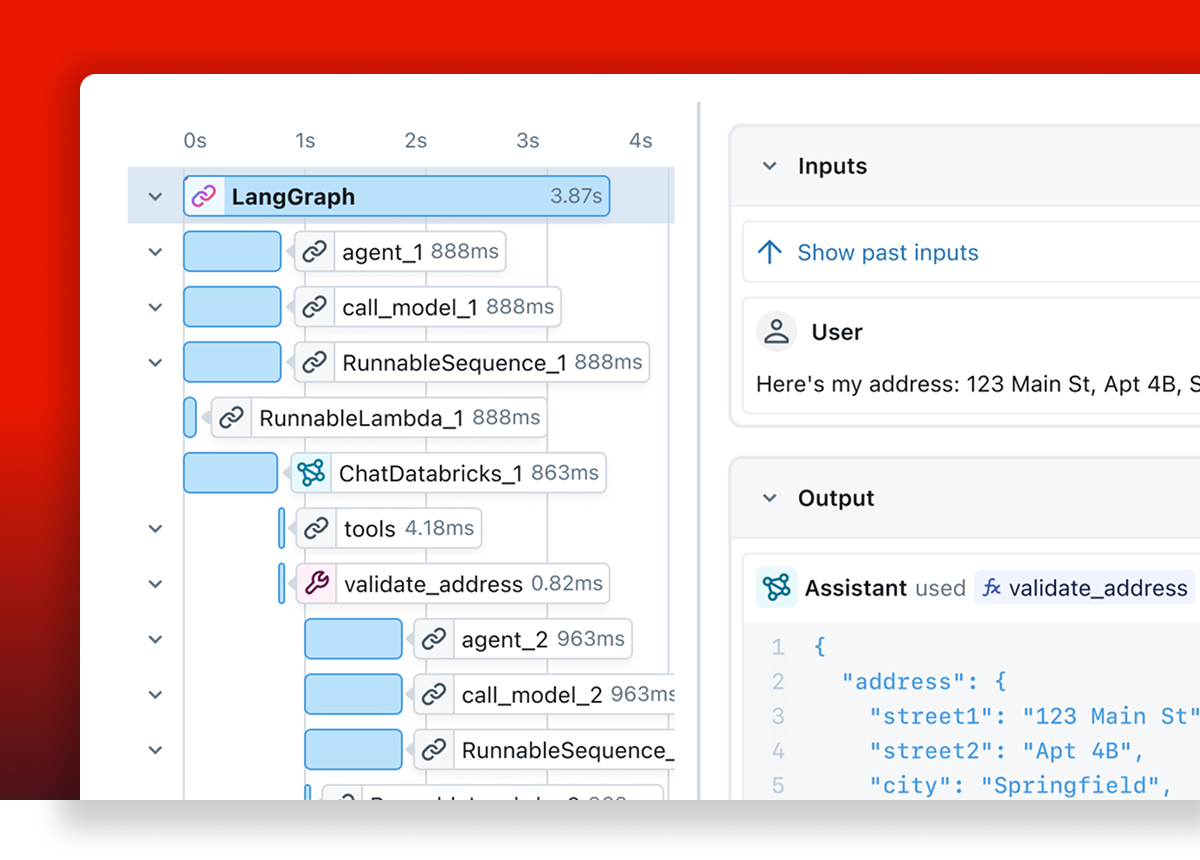

Debug with tracing

Debug and iterate on GenAI applications with MLflow Tracing, which captures your app's entire execution, including prompts, retrievals, and tool calls.

MLflow's open-source, OpenTelemetry-compatible tracing SDK helps avoid vendor lock-in.
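
To make "captures your app's entire execution" concrete, here is a minimal, stdlib-only sketch of what a trace records. Each function call becomes a span with its inputs, outputs, timing, and a parent link, so a retrieval and a tool call nest under the request that triggered them. This is not MLflow's API: the `traced` decorator, the `Span` record, and the sample functions are hypothetical, and the span-type labels are only loosely modeled on MLflow's. In MLflow itself you would use its own instrumentation (for example the `mlflow.trace` decorator) and inspect traces in the MLflow UI.

```python
import contextvars
import functools
import itertools
import time
from dataclasses import dataclass
from typing import Any, Callable, Optional


# Hypothetical in-memory span record (not MLflow's Span class).
@dataclass
class Span:
    span_id: int
    parent_id: Optional[int]
    name: str
    span_type: str
    inputs: dict
    outputs: Any = None
    duration_s: float = 0.0


SPANS: list = []  # collected spans, appended in the order they start
_ids = itertools.count(1)
# Tracks the currently open span so nested calls know their parent.
_current = contextvars.ContextVar("current_span", default=None)


def traced(span_type: str) -> Callable:
    """Record each call of the wrapped function as a span nested under the caller's span."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            span = Span(next(_ids), _current.get(), fn.__name__, span_type,
                        inputs={"args": args, "kwargs": kwargs})
            SPANS.append(span)
            token = _current.set(span.span_id)  # this span becomes the parent of nested calls
            start = time.perf_counter()
            try:
                span.outputs = fn(*args, **kwargs)
                return span.outputs
            finally:
                span.duration_s = time.perf_counter() - start
                _current.reset(token)  # restore the caller's span as current
        return wrapper
    return decorator


@traced("RETRIEVER")
def retrieve(query: str) -> list:
    # Hypothetical retriever returning a fixed document.
    return ["MLflow Tracing is OpenTelemetry-compatible."]


@traced("TOOL")
def word_count(text: str) -> int:
    # Hypothetical tool call.
    return len(text.split())


@traced("CHAIN")
def answer(question: str) -> str:
    docs = retrieve(question)
    n = word_count(docs[0])
    return f"{docs[0]} ({n} words of context)"


answer("Is MLflow Tracing vendor-neutral?")
for s in SPANS:
    print(s.span_id, s.parent_id, s.span_type, s.name)
```

A context variable, rather than a plain global, holds the current parent span so that concurrent threads or asyncio tasks do not overwrite each other's parent link.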

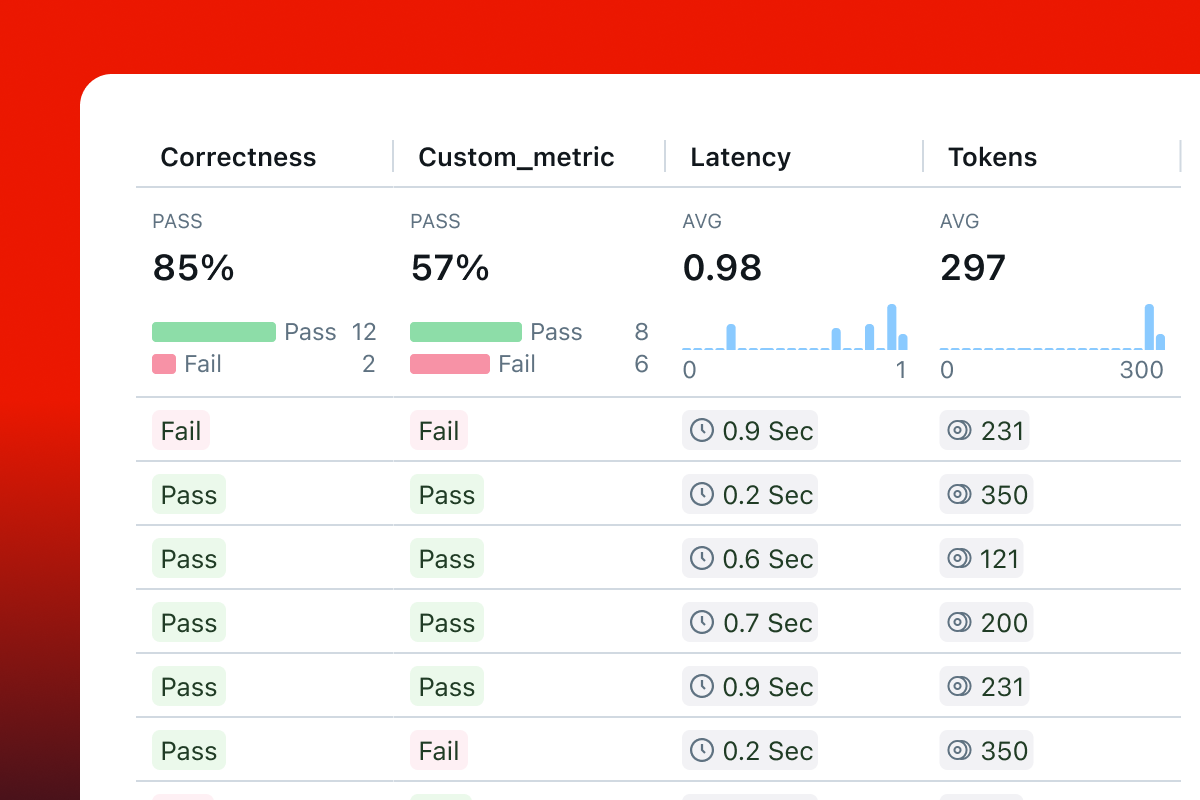

Accurately measure free-form language with LLM judges

Use LLM-as-a-judge metrics, which mimic human expert review, to assess and improve GenAI quality. Start with pre-built judges for common metrics such as hallucination or relevance, or build custom judges tailored to your business needs and your experts' insights.
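
At its core, an LLM judge is a grading prompt plus a parser for the model's verdict. The stdlib-only sketch below shows that shape for a relevance check. It is not one of MLflow's built-in judges: `judge_relevance`, the prompt wording, and the 1-5 scale are hypothetical, and `fake_llm` stands in for a real model call so the example runs offline.

```python
import json
from typing import Callable

# Hypothetical judge prompt; a real judge's wording and criteria would differ.
# Doubled braces survive str.format() as literal JSON braces.
JUDGE_TEMPLATE = """You are grading an answer for relevance to the question.
Question: {question}
Answer: {answer}
Reply with JSON: {{"score": <integer 1-5>, "rationale": "<one sentence>"}}"""


def judge_relevance(question: str, answer: str, llm: Callable[[str], str]) -> dict:
    """Ask an LLM (any prompt -> text callable) to grade relevance, then parse and validate its verdict."""
    prompt = JUDGE_TEMPLATE.format(question=question, answer=answer)
    verdict = json.loads(llm(prompt))
    score = int(verdict["score"])
    if not 1 <= score <= 5:
        raise ValueError(f"judge returned out-of-range score: {score}")
    return {"score": score, "rationale": verdict["rationale"]}


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs without network access.
    return '{"score": 4, "rationale": "The answer addresses the question directly."}'


result = judge_relevance("What does MLflow Tracing capture?",
                         "Prompts, retrievals, and tool calls.", fake_llm)
print(result["score"], result["rationale"])
```

Asking the judge for structured output with a rationale, and validating the score range, keeps a misbehaving judge from silently producing bad metrics. A custom judge for a business-specific criterion would mostly differ in the prompt.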

Why us?

Why MLflow is unique

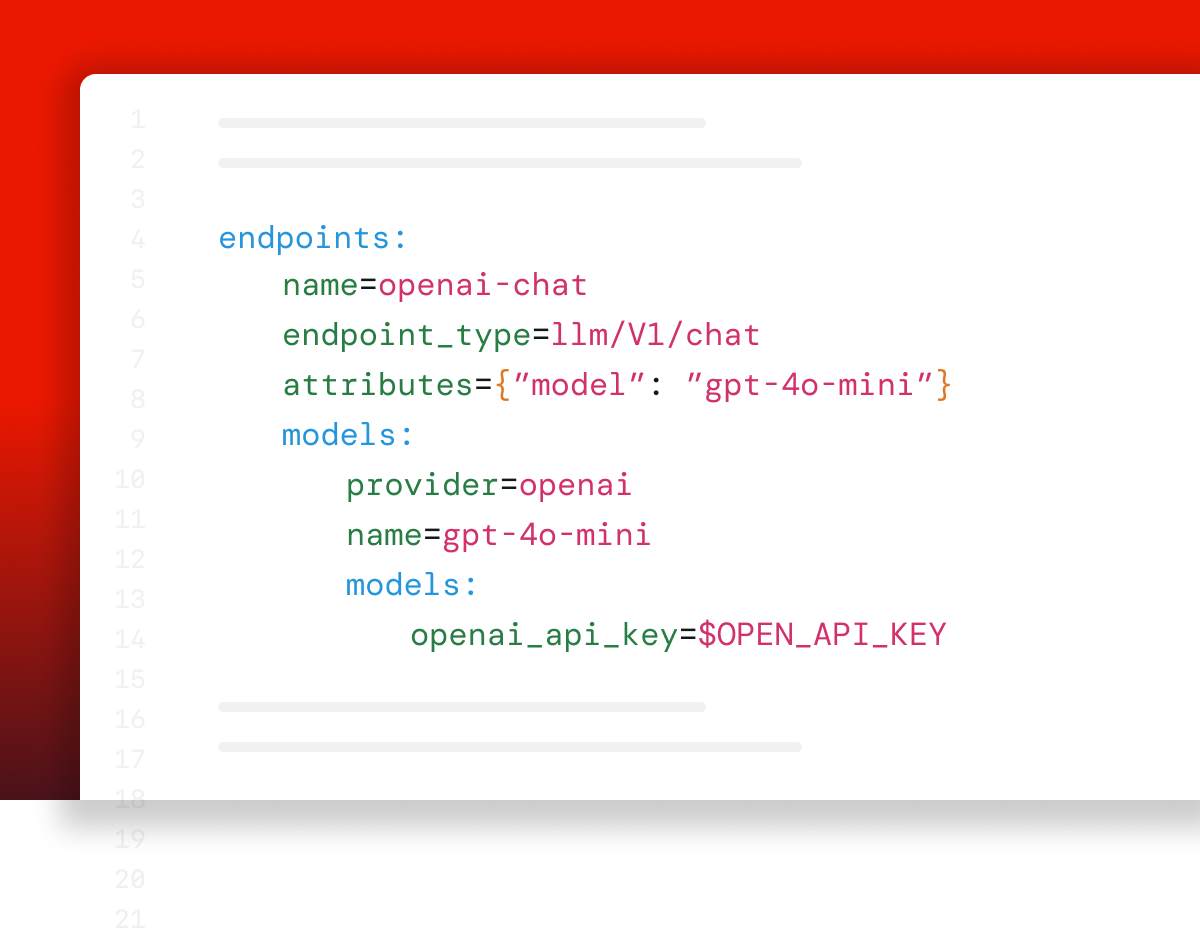

Open, Flexible, and Extensible

MLflow is open source and extensible. It integrates with the broader GenAI and ML ecosystem and uses open protocols so you keep ownership of your data, which helps you avoid vendor lock-in and adapt to both your existing and future stacks.

Unified, End-to-End MLOps and AI Observability

MLflow offers a single platform for the entire GenAI and ML model lifecycle. With fewer tools to stitch together, there is less integration friction, a simpler experience, and easier collaboration across teams.

Framework neutrality

MLflow's framework-agnostic design is one of its strongest differentiators. Unlike proprietary solutions that lock you into a specific ecosystem, MLflow works with popular ML and GenAI frameworks such as scikit-learn, PyTorch, and LangChain.

Enterprise adoption

MLflow's impact extends beyond its technical capabilities. Created by Databricks, it has become one of the most widely adopted MLOps tools in the industry, with integration support from major cloud providers.

Get started with MLflow

Choose from two options depending on your needs

GET INVOLVED

Connect with the open source community

Join millions of MLflow users